參考資料:http://www.ruanyifeng.com/blog/2013/03/automatic_summarization.html

python和JAVA的區別? http://joshbohde.com/blog/document-summarization

1、介紹

?

java調用python? 1、本文自動文本摘要實現的依據就是詞頻統計

2、文章是由句子組成的,文章的信息都包含在句子中,有些句子包含的信息多,有些句子包含的信息少。

3、句子的信息量用"關鍵詞"來衡量。如果包含的關鍵詞越多,就說明這個句子越重要。

4、"自動摘要"就是要找出那些包含信息最多的句子,也就是包含關鍵字最多的句子

5、而通過統計句子中關鍵字的頻率的大小,進而進行排序,通過對排序的詞頻列表對文檔中句子逐個進行打分,進而把打分高的句子找出來,就是我們要的摘要。

?

2、實現步驟

1、加載停用詞

2、將文檔拆分成句子列表

3、將句子列表分詞

4、統計詞頻,取出100個最高的關鍵字

5、根據詞頻對句子列表進行打分

6、取出打分較高的前5個句子

3、原理

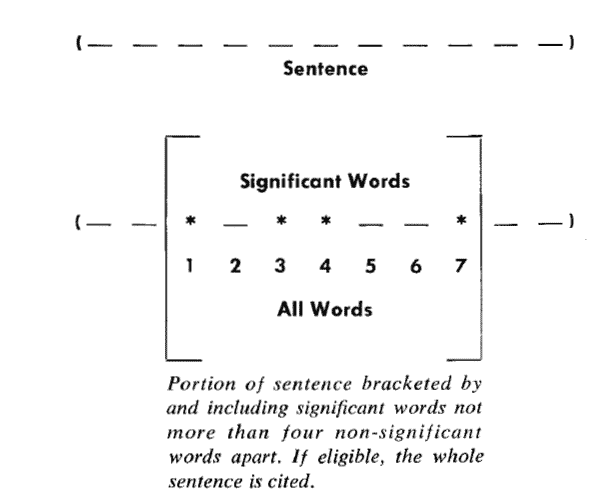

這種方法最早出自1958年的IBM公司科學家H.P. Luhn的論文《The Automatic Creation of Literature Abstracts》。Luhn提出用"簇"(cluster)表示關鍵詞的聚集。所謂"簇"就是包含多個關鍵詞的句子片段。

上圖就是Luhn原始論文的插圖,被框起來的部分就是一個"簇"。只要關鍵詞之間的距離小于"門檻值",它們就被認為處于同一個簇之中。Luhn建議的門檻值是4或5。

也就是說,如果兩個關鍵詞之間有5個以上的其他詞,就可以把這兩個關鍵詞分在兩個簇。

簇重要性分值計算公式:

以前圖為例,其中的簇一共有7個詞,其中4個是關鍵詞。因此,它的重要性分值等于 ( 4 x 4 ) / 7 = 2.3。

然后,找出包含分值最高的簇的句子(比如前5句),把它們合在一起,就構成了這篇文章的自動摘要

具體實現可以參見《Mining the Social Web: Analyzing Data from Facebook, Twitter, LinkedIn, and Other Social Media Sites》(O'Reilly, 2011)一書的第8章,python代碼見github。

?

4、相關代碼

python實現代碼:

#!/user/bin/python # coding:utf-8import nltk import numpy import jieba import codecs import os

class SummaryTxt:def __init__(self,stopwordspath):# 單詞數量self.N = 100# 單詞間的距離self.CLUSTER_THRESHOLD = 5# 返回的top n句子self.TOP_SENTENCES = 5self.stopwrods = {}#加載停用詞if os.path.exists(stopwordspath):stoplist = [line.strip() for line in codecs.open(stopwordspath, 'r', encoding='utf8').readlines()]self.stopwrods = {}.fromkeys(stoplist)def _split_sentences(self,texts):'''把texts拆分成單個句子,保存在列表里面,以(.!?。!?)這些標點作為拆分的意見,:param texts: 文本信息:return:'''splitstr = '.!?。!?'.decode('utf8')start = 0index = 0 # 每個字符的位置sentences = []for text in texts:if text in splitstr: # 檢查標點符號下一個字符是否還是標點sentences.append(texts[start:index + 1]) # 當前標點符號位置start = index + 1 # start標記到下一句的開頭index += 1if start < len(texts):sentences.append(texts[start:]) # 這是為了處理文本末尾沒有標return sentencesdef _score_sentences(self,sentences, topn_words):'''利用前N個關鍵字給句子打分:param sentences: 句子列表:param topn_words: 關鍵字列表:return:'''scores = []sentence_idx = -1for s in [list(jieba.cut(s)) for s in sentences]:sentence_idx += 1word_idx = []for w in topn_words:try:word_idx.append(s.index(w)) # 關鍵詞出現在該句子中的索引位置except ValueError: # w不在句子中password_idx.sort()if len(word_idx) == 0:continue# 對于兩個連續的單詞,利用單詞位置索引,通過距離閥值計算族clusters = []cluster = [word_idx[0]]i = 1while i < len(word_idx):if word_idx[i] - word_idx[i - 1] < self.CLUSTER_THRESHOLD:cluster.append(word_idx[i])else:clusters.append(cluster[:])cluster = [word_idx[i]]i += 1clusters.append(cluster)# 對每個族打分,每個族類的最大分數是對句子的打分max_cluster_score = 0for c in clusters:significant_words_in_cluster = len(c)total_words_in_cluster = c[-1] - c[0] + 1score = 1.0 * significant_words_in_cluster * significant_words_in_cluster / total_words_in_clusterif score > max_cluster_score:max_cluster_score = scorescores.append((sentence_idx, max_cluster_score))return scoresdef summaryScoredtxt(self,text):# 將文章分成句子sentences = self._split_sentences(text)# 生成分詞words = [w for sentence in sentences for w in jieba.cut(sentence) if w not in self.stopwrods iflen(w) > 1 and w != '\t']# words = []# for sentence in sentences:# for w in jieba.cut(sentence):# if w not in stopwords and len(w) > 1 and w != '\t':# words.append(w)# 統計詞頻wordfre = nltk.FreqDist(words)# 獲取詞頻最高的前N個詞topn_words = [w[0] for w in sorted(wordfre.items(), key=lambda d: d[1], reverse=True)][:self.N]# 根據最高的n個關鍵詞,給句子打分scored_sentences = self._score_sentences(sentences, topn_words)# 利用均值和標準差過濾非重要句子avg = numpy.mean([s[1] for s in scored_sentences]) # 均值std = numpy.std([s[1] for s in scored_sentences]) # 標準差summarySentences = []for (sent_idx, score) in scored_sentences:if score > (avg + 0.5 * std):summarySentences.append(sentences[sent_idx])print sentences[sent_idx]return summarySentencesdef summaryTopNtxt(self,text):# 將文章分成句子sentences = self._split_sentences(text)# 根據句子列表生成分詞列表words = [w for sentence in sentences for w in jieba.cut(sentence) if w not in self.stopwrods iflen(w) > 1 and w != '\t']# words = []# for sentence in sentences:# for w in jieba.cut(sentence):# if w not in stopwords and len(w) > 1 and w != '\t':# words.append(w)# 統計詞頻wordfre = nltk.FreqDist(words)# 獲取詞頻最高的前N個詞topn_words = [w[0] for w in sorted(wordfre.items(), key=lambda d: d[1], reverse=True)][:self.N]# 根據最高的n個關鍵詞,給句子打分scored_sentences = self._score_sentences(sentences, topn_words)top_n_scored = sorted(scored_sentences, key=lambda s: s[1])[-self.TOP_SENTENCES:]top_n_scored = sorted(top_n_scored, key=lambda s: s[0])summarySentences = []for (idx, score) in top_n_scored:print sentences[idx]summarySentences.append(sentences[idx])return sentencesif __name__=='__main__':obj =SummaryTxt('D:\work\Solr\solr-python\CNstopwords.txt')txt=u'十八大以來的五年,是黨和國家發展進程中極不平凡的五年。面對世界經濟復蘇乏力、局部沖突和動蕩頻發、全球性問題加劇的外部環境,面對我國經濟發展進入新常態等一系列深刻變化,我們堅持穩中求進工作總基調,迎難而上,開拓進取,取得了改革開放和社會主義現代化建設的歷史性成就。' \u'為貫徹十八大精神,黨中央召開七次全會,分別就政府機構改革和職能轉變、全面深化改革、全面推進依法治國、制定“十三五”規劃、全面從嚴治黨等重大問題作出決定和部署。五年來,我們統籌推進“五位一體”總體布局、協調推進“四個全面”戰略布局,“十二五”規劃勝利完成,“十三五”規劃順利實施,黨和國家事業全面開創新局面。' \u'經濟建設取得重大成就。堅定不移貫徹新發展理念,堅決端正發展觀念、轉變發展方式,發展質量和效益不斷提升。經濟保持中高速增長,在世界主要國家中名列前茅,國內生產總值從五十四萬億元增長到八十萬億元,穩居世界第二,對世界經濟增長貢獻率超過百分之三十。供給側結構性改革深入推進,經濟結構不斷優化,數字經濟等新興產業蓬勃發展,高鐵、公路、橋梁、港口、機場等基礎設施建設快速推進。農業現代化穩步推進,糧食生產能力達到一萬二千億斤。城鎮化率年均提高一點二個百分點,八千多萬農業轉移人口成為城鎮居民。區域發展協調性增強,“一帶一路”建設、京津冀協同發展、長江經濟帶發展成效顯著。創新驅動發展戰略大力實施,創新型國家建設成果豐碩,天宮、蛟龍、天眼、悟空、墨子、大飛機等重大科技成果相繼問世。南海島礁建設積極推進。開放型經濟新體制逐步健全,對外貿易、對外投資、外匯儲備穩居世界前列。' \u'全面深化改革取得重大突破。蹄疾步穩推進全面深化改革,堅決破除各方面體制機制弊端。改革全面發力、多點突破、縱深推進,著力增強改革系統性、整體性、協同性,壓茬拓展改革廣度和深度,推出一千五百多項改革舉措,重要領域和關鍵環節改革取得突破性進展,主要領域改革主體框架基本確立。中國特色社會主義制度更加完善,國家治理體系和治理能力現代化水平明顯提高,全社會發展活力和創新活力明顯增強。'# txt ='The information disclosed by the Film Funds Office of the State Administration of Press, Publication, Radio, Film and Television shows that, the total box office in China amounted to nearly 3 billion yuan during the first six days of the lunar year (February 8 - 13), an increase of 67% compared to the 1.797 billion yuan in the Chinese Spring Festival period in 2015, becoming the "Best Chinese Spring Festival Period in History".' \# 'During the Chinese Spring Festival period, "The Mermaid" contributed to a box office of 1.46 billion yuan. "The Man From Macau III" reached a box office of 680 million yuan. "The Journey to the West: The Monkey King 2" had a box office of 650 million yuan. "Kung Fu Panda 3" also had a box office of exceeding 130 million. These four blockbusters together contributed more than 95% of the total box office during the Chinese Spring Festival period.' \# 'There were many factors contributing to the popularity during the Chinese Spring Festival period. Apparently, the overall popular film market with good box office was driven by the emergence of a few blockbusters. In fact, apart from the appeal of the films, other factors like film ticket subsidy of online seat-selection companies, cinema channel sinking and the film-viewing heat in the middle and small cities driven by the home-returning wave were all main factors contributing to this blowout. A management of Shanghai Film Group told the 21st Century Business Herald.'print txtprint "--"obj.summaryScoredtxt(txt)print "----"obj.summaryTopNtxt(txt)

?

java實現代碼:

//import com.hankcs.hanlp.HanLP;import java.io.BufferedReader; import java.io.InputStreamReader; import java.io.FileInputStream; import java.io.IOException; import java.io.Reader; import java.io.StringReader; import java.util.ArrayList; import java.util.Collections; import java.util.Comparator; import java.util.HashMap; import java.util.HashSet; import java.util.LinkedHashMap; import java.util.List; import java.util.Map; import java.util.Map.Entry; import java.util.PriorityQueue; import java.util.Queue; import java.util.Set; import java.util.TreeMap; import java.util.regex.Pattern; import java.util.regex.Matcher;//import com.hankcs.hanlp.seg.common.Term; import org.wltea.analyzer.core.IKSegmenter; import org.wltea.analyzer.core.Lexeme; /*** @Author:sks* @Description:文本摘要提取文中重要的關鍵句子,使用top-n關鍵詞在句子中的比例關系* 返回過濾句子方法為:1.均值標準差,2.top-n句子,3.最大邊緣相關top-n句子* @Date:Created in 16:40 2017/12/22* @Modified by:**/ public class NewsSummary {//保留關鍵詞數量int N = 50;//關鍵詞間的距離閥值int CLUSTER_THRESHOLD = 5;//前top-n句子int TOP_SENTENCES = 10;//最大邊緣相關閥值double λ = 0.4;//句子得分使用方法final Set<String> styleSet = new HashSet<String>();//停用詞列表Set<String> stopWords = new HashSet<String>();//句子編號及分詞列表Map<Integer,List<String>> sentSegmentWords = null;public NewsSummary(){this.loadStopWords("D:\\work\\Solr\\solr-python\\CNstopwords.txt");styleSet.add("meanstd");styleSet.add("default");styleSet.add("MMR");}/*** 加載停詞* @param path*/private void loadStopWords(String path){BufferedReader br=null;try{InputStreamReader reader = new InputStreamReader(new FileInputStream(path),"utf-8");br = new BufferedReader(reader);String line=null;while((line=br.readLine())!=null){stopWords.add(line);}br.close();}catch(IOException e){e.printStackTrace();}}/*** @Author:sks* @Description:利用正則將文本拆分成句子* @Date:*/private List<String> SplitSentences(String text){List<String> sentences = new ArrayList<String>();String regEx = "[!?。!?.]";Pattern p = Pattern.compile(regEx);String[] sents = p.split(text);Matcher m = p.matcher(text);int sentsLen = sents.length;if(sentsLen>0){ //把每個句子的末尾標點符號加上int index = 0;while(index < sentsLen){if(m.find()){sents[index] += m.group();}index++;}}for(String sentence:sents){//文章從網頁上拷貝過來后遺留下來的沒有處理掉的html的標志sentence=sentence.replaceAll("(”|“|—|‘|’|·|"|↓|•)", "");sentences.add(sentence.trim());}return sentences;}/*** 這里使用IK進行分詞* @param text* @return*/private List<String> IKSegment(String text){List<String> wordList = new ArrayList<String>();Reader reader = new StringReader(text);IKSegmenter ik = new IKSegmenter(reader,true);Lexeme lex = null;try {while((lex=ik.next())!=null){String word=lex.getLexemeText();if(word.equals("nbsp") || this.stopWords.contains(word))continue;if(word.length()>1 && word!="\t")wordList.add(word);}} catch (IOException e) {e.printStackTrace();}return wordList;}/*** 每個句子得分 (keywordsLen*keywordsLen/totalWordsLen)* @param sentences 分句* @param topnWords keywords top-n關鍵詞* @return*/private Map<Integer,Double> scoreSentences(List<String> sentences,List<String> topnWords){Map<Integer, Double> scoresMap=new LinkedHashMap<Integer,Double>();//句子編號,得分sentSegmentWords=new HashMap<Integer,List<String>>();int sentence_idx=-1;//句子編號for(String sentence:sentences){sentence_idx+=1;List<String> words=this.IKSegment(sentence);//對每個句子分詞 // List<String> words= HanLP.segment(sentence); sentSegmentWords.put(sentence_idx, words);List<Integer> word_idx=new ArrayList<Integer>();//每個關詞鍵在本句子中的位置for(String word:topnWords){if(words.contains(word)){word_idx.add(words.indexOf(word));}elsecontinue;}if(word_idx.size()==0)continue;Collections.sort(word_idx);//對于兩個連續的單詞,利用單詞位置索引,通過距離閥值計算一個族List<List<Integer>> clusters=new ArrayList<List<Integer>>();//根據本句中的關鍵詞的距離存放多個詞族List<Integer> cluster=new ArrayList<Integer>();cluster.add(word_idx.get(0));int i=1;while(i<word_idx.size()){if((word_idx.get(i)-word_idx.get(i-1))<this.CLUSTER_THRESHOLD)cluster.add(word_idx.get(i));else{clusters.add(cluster);cluster=new ArrayList<Integer>();cluster.add(word_idx.get(i));}i+=1;}clusters.add(cluster);//對每個詞族打分,選擇最高得分作為本句的得分double max_cluster_score=0.0;for(List<Integer> clu:clusters){int keywordsLen=clu.size();//關鍵詞個數int totalWordsLen=clu.get(keywordsLen-1)-clu.get(0)+1;//總的詞數double score=1.0*keywordsLen*keywordsLen/totalWordsLen;if(score>max_cluster_score)max_cluster_score=score;}scoresMap.put(sentence_idx,max_cluster_score);}return scoresMap;}/*** @Author:sks* @Description:利用均值方差自動文摘* @Date:*/public String SummaryMeanstdTxt(String text){//將文本拆分成句子列表List<String> sentencesList = this.SplitSentences(text);//利用IK分詞組件將文本分詞,返回分詞列表List<String> words = this.IKSegment(text); // List<Term> words1= HanLP.segment(text);//統計分詞頻率Map<String,Integer> wordsMap = new HashMap<String,Integer>();for(String word:words){Integer val = wordsMap.get(word);wordsMap.put(word,val == null ? 1: val + 1);}//使用優先隊列自動排序Queue<Map.Entry<String, Integer>> wordsQueue=new PriorityQueue<Map.Entry<String,Integer>>(wordsMap.size(),new Comparator<Map.Entry<String,Integer>>(){// @Overridepublic int compare(Entry<String, Integer> o1,Entry<String, Integer> o2) {return o2.getValue()-o1.getValue();}});wordsQueue.addAll(wordsMap.entrySet());if( N > wordsMap.size())N = wordsQueue.size();//取前N個頻次最高的詞存在wordsListList<String> wordsList = new ArrayList<String>(N);//top-n關鍵詞for(int i = 0;i < N;i++){Entry<String,Integer> entry= wordsQueue.poll();wordsList.add(entry.getKey());}//利用頻次關鍵字,給句子打分,并對打分后句子列表依據得分大小降序排序Map<Integer,Double> scoresLinkedMap = scoreSentences(sentencesList,wordsList);//返回的得分,從第一句開始,句子編號的自然順序//approach1,利用均值和標準差過濾非重要句子Map<Integer,String> keySentence = new LinkedHashMap<Integer,String>();//句子得分均值double sentenceMean = 0.0;for(double value:scoresLinkedMap.values()){sentenceMean += value;}sentenceMean /= scoresLinkedMap.size();//句子得分標準差double sentenceStd=0.0;for(Double score:scoresLinkedMap.values()){sentenceStd += Math.pow((score-sentenceMean), 2);}sentenceStd = Math.sqrt(sentenceStd / scoresLinkedMap.size());for(Map.Entry<Integer, Double> entry:scoresLinkedMap.entrySet()){//過濾低分句子if(entry.getValue()>(sentenceMean+0.5*sentenceStd))keySentence.put(entry.getKey(), sentencesList.get(entry.getKey()));}StringBuilder sb = new StringBuilder();for(int index:keySentence.keySet())sb.append(keySentence.get(index));return sb.toString();}/*** @Author:sks* @Description:默認返回排序得分top-n句子* @Date:*/public String SummaryTopNTxt(String text){//將文本拆分成句子列表List<String> sentencesList = this.SplitSentences(text);//利用IK分詞組件將文本分詞,返回分詞列表List<String> words = this.IKSegment(text); // List<Term> words1= HanLP.segment(text);//統計分詞頻率Map<String,Integer> wordsMap = new HashMap<String,Integer>();for(String word:words){Integer val = wordsMap.get(word);wordsMap.put(word,val == null ? 1: val + 1);}//使用優先隊列自動排序Queue<Map.Entry<String, Integer>> wordsQueue=new PriorityQueue<Map.Entry<String,Integer>>(wordsMap.size(),new Comparator<Map.Entry<String,Integer>>(){// @Overridepublic int compare(Entry<String, Integer> o1,Entry<String, Integer> o2) {return o2.getValue()-o1.getValue();}});wordsQueue.addAll(wordsMap.entrySet());if( N > wordsMap.size())N = wordsQueue.size();//取前N個頻次最高的詞存在wordsListList<String> wordsList = new ArrayList<String>(N);//top-n關鍵詞for(int i = 0;i < N;i++){Entry<String,Integer> entry= wordsQueue.poll();wordsList.add(entry.getKey());}//利用頻次關鍵字,給句子打分,并對打分后句子列表依據得分大小降序排序Map<Integer,Double> scoresLinkedMap = scoreSentences(sentencesList,wordsList);//返回的得分,從第一句開始,句子編號的自然順序List<Map.Entry<Integer, Double>> sortedSentList = new ArrayList<Map.Entry<Integer,Double>>(scoresLinkedMap.entrySet());//按得分從高到底排序好的句子,句子編號與得分//System.setProperty("java.util.Arrays.useLegacyMergeSort", "true");Collections.sort(sortedSentList, new Comparator<Map.Entry<Integer, Double>>(){// @Overridepublic int compare(Entry<Integer, Double> o1,Entry<Integer, Double> o2) {return o2.getValue() == o1.getValue() ? 0 :(o2.getValue() > o1.getValue() ? 1 : -1);}});//approach2,默認返回排序得分top-n句子Map<Integer,String> keySentence = new TreeMap<Integer,String>();int count = 0;for(Map.Entry<Integer, Double> entry:sortedSentList){count++;keySentence.put(entry.getKey(), sentencesList.get(entry.getKey()));if(count == this.TOP_SENTENCES)break;}StringBuilder sb=new StringBuilder();for(int index:keySentence.keySet())sb.append(keySentence.get(index));return sb.toString();}/*** @Author:sks* @Description:利用最大邊緣相關自動文摘* @Date:*/public String SummaryMMRNTxt(String text){//將文本拆分成句子列表List<String> sentencesList = this.SplitSentences(text);//利用IK分詞組件將文本分詞,返回分詞列表List<String> words = this.IKSegment(text); // List<Term> words1= HanLP.segment(text);//統計分詞頻率Map<String,Integer> wordsMap = new HashMap<String,Integer>();for(String word:words){Integer val = wordsMap.get(word);wordsMap.put(word,val == null ? 1: val + 1);}//使用優先隊列自動排序Queue<Map.Entry<String, Integer>> wordsQueue=new PriorityQueue<Map.Entry<String,Integer>>(wordsMap.size(),new Comparator<Map.Entry<String,Integer>>(){// @Overridepublic int compare(Entry<String, Integer> o1,Entry<String, Integer> o2) {return o2.getValue()-o1.getValue();}});wordsQueue.addAll(wordsMap.entrySet());if( N > wordsMap.size())N = wordsQueue.size();//取前N個頻次最高的詞存在wordsListList<String> wordsList = new ArrayList<String>(N);//top-n關鍵詞for(int i = 0;i < N;i++){Entry<String,Integer> entry= wordsQueue.poll();wordsList.add(entry.getKey());}//利用頻次關鍵字,給句子打分,并對打分后句子列表依據得分大小降序排序Map<Integer,Double> scoresLinkedMap = scoreSentences(sentencesList,wordsList);//返回的得分,從第一句開始,句子編號的自然順序List<Map.Entry<Integer, Double>> sortedSentList = new ArrayList<Map.Entry<Integer,Double>>(scoresLinkedMap.entrySet());//按得分從高到底排序好的句子,句子編號與得分//System.setProperty("java.util.Arrays.useLegacyMergeSort", "true");Collections.sort(sortedSentList, new Comparator<Map.Entry<Integer, Double>>(){// @Overridepublic int compare(Entry<Integer, Double> o1,Entry<Integer, Double> o2) {return o2.getValue() == o1.getValue() ? 0 :(o2.getValue() > o1.getValue() ? 1 : -1);}});//approach3,利用最大邊緣相關,返回前top-n句子if(sentencesList.size()==2){return sentencesList.get(0)+sentencesList.get(1);}else if(sentencesList.size()==1)return sentencesList.get(0);Map<Integer,String> keySentence = new TreeMap<Integer,String>();int count = 0;Map<Integer,Double> MMR_SentScore = MMR(sortedSentList);for(Map.Entry<Integer, Double> entry:MMR_SentScore.entrySet()){count++;int sentIndex=entry.getKey();String sentence=sentencesList.get(sentIndex);keySentence.put(sentIndex, sentence);if(count==this.TOP_SENTENCES)break;}StringBuilder sb=new StringBuilder();for(int index:keySentence.keySet())sb.append(keySentence.get(index));return sb.toString();}/*** 計算文本摘要* @param text* @param style(meanstd,default,MMR)* @return*/public String summarize(String text,String style){try {if(!styleSet.contains(style) || text.trim().equals(""))throw new IllegalArgumentException("方法 summarize(String text,String style)中text不能為空,style必須是meanstd、default或者MMR");} catch (Exception e) {// TODO Auto-generated catch block e.printStackTrace();System.exit(1);}//將文本拆分成句子列表List<String> sentencesList = this.SplitSentences(text);//利用IK分詞組件將文本分詞,返回分詞列表List<String> words = this.IKSegment(text); // List<Term> words1= HanLP.segment(text);//統計分詞頻率Map<String,Integer> wordsMap = new HashMap<String,Integer>();for(String word:words){Integer val = wordsMap.get(word);wordsMap.put(word,val == null ? 1: val + 1);}//使用優先隊列自動排序Queue<Map.Entry<String, Integer>> wordsQueue=new PriorityQueue<Map.Entry<String,Integer>>(wordsMap.size(),new Comparator<Map.Entry<String,Integer>>(){ // @Overridepublic int compare(Entry<String, Integer> o1,Entry<String, Integer> o2) {return o2.getValue()-o1.getValue();}});wordsQueue.addAll(wordsMap.entrySet());if( N > wordsMap.size())N = wordsQueue.size();//取前N個頻次最高的詞存在wordsListList<String> wordsList = new ArrayList<String>(N);//top-n關鍵詞for(int i = 0;i < N;i++){Entry<String,Integer> entry= wordsQueue.poll();wordsList.add(entry.getKey());}//利用頻次關鍵字,給句子打分,并對打分后句子列表依據得分大小降序排序Map<Integer,Double> scoresLinkedMap = scoreSentences(sentencesList,wordsList);//返回的得分,從第一句開始,句子編號的自然順序 Map<Integer,String> keySentence=null;//approach1,利用均值和標準差過濾非重要句子if(style.equals("meanstd")){keySentence = new LinkedHashMap<Integer,String>();//句子得分均值double sentenceMean = 0.0;for(double value:scoresLinkedMap.values()){sentenceMean += value;}sentenceMean /= scoresLinkedMap.size();//句子得分標準差double sentenceStd=0.0;for(Double score:scoresLinkedMap.values()){sentenceStd += Math.pow((score-sentenceMean), 2);}sentenceStd = Math.sqrt(sentenceStd / scoresLinkedMap.size());for(Map.Entry<Integer, Double> entry:scoresLinkedMap.entrySet()){//過濾低分句子if(entry.getValue()>(sentenceMean+0.5*sentenceStd))keySentence.put(entry.getKey(), sentencesList.get(entry.getKey()));}}List<Map.Entry<Integer, Double>> sortedSentList = new ArrayList<Map.Entry<Integer,Double>>(scoresLinkedMap.entrySet());//按得分從高到底排序好的句子,句子編號與得分//System.setProperty("java.util.Arrays.useLegacyMergeSort", "true");Collections.sort(sortedSentList, new Comparator<Map.Entry<Integer, Double>>(){// @Overridepublic int compare(Entry<Integer, Double> o1,Entry<Integer, Double> o2) {return o2.getValue() == o1.getValue() ? 0 :(o2.getValue() > o1.getValue() ? 1 : -1);}});//approach2,默認返回排序得分top-n句子if(style.equals("default")){keySentence = new TreeMap<Integer,String>();int count = 0;for(Map.Entry<Integer, Double> entry:sortedSentList){count++;keySentence.put(entry.getKey(), sentencesList.get(entry.getKey()));if(count == this.TOP_SENTENCES)break;}}//approach3,利用最大邊緣相關,返回前top-n句子if(style.equals("MMR")){if(sentencesList.size()==2){return sentencesList.get(0)+sentencesList.get(1);}else if(sentencesList.size()==1)return sentencesList.get(0);keySentence = new TreeMap<Integer,String>();int count = 0;Map<Integer,Double> MMR_SentScore = MMR(sortedSentList);for(Map.Entry<Integer, Double> entry:MMR_SentScore.entrySet()){count++;int sentIndex=entry.getKey();String sentence=sentencesList.get(sentIndex);keySentence.put(sentIndex, sentence);if(count==this.TOP_SENTENCES)break;}}StringBuilder sb=new StringBuilder();for(int index:keySentence.keySet())sb.append(keySentence.get(index));//System.out.println("summarize out...");return sb.toString();}/*** 最大邊緣相關(Maximal Marginal Relevance),根據λ調節準確性和多樣性* max[λ*score(i) - (1-λ)*max[similarity(i,j)]]:score(i)句子的得分,similarity(i,j)句子i與j的相似度* User-tunable diversity through λ parameter* - High λ= Higher accuracy* - Low λ= Higher diversity* @param sortedSentList 排好序的句子,編號及得分* @return*/private Map<Integer,Double> MMR(List<Map.Entry<Integer, Double>> sortedSentList){//System.out.println("MMR In...");double[][] simSentArray=sentJSimilarity();//所有句子的相似度Map<Integer,Double> sortedLinkedSent=new LinkedHashMap<Integer,Double>();for(Map.Entry<Integer, Double> entry:sortedSentList){sortedLinkedSent.put(entry.getKey(),entry.getValue());}Map<Integer,Double> MMR_SentScore=new LinkedHashMap<Integer,Double>();//最終的得分(句子編號與得分)Map.Entry<Integer, Double> Entry=sortedSentList.get(0);//第一步先將最高分的句子加入 MMR_SentScore.put(Entry.getKey(), Entry.getValue());boolean flag=true;while(flag){int index=0;double maxScore=Double.NEGATIVE_INFINITY;//通過迭代計算獲得最高分句子for(Map.Entry<Integer, Double> entry:sortedLinkedSent.entrySet()){if(MMR_SentScore.containsKey(entry.getKey())) continue;double simSentence=0.0;for(Map.Entry<Integer, Double> MMREntry:MMR_SentScore.entrySet()){//這個是獲得最相似的那個句子的最大相似值double simSen=0.0;if(entry.getKey()>MMREntry.getKey())simSen=simSentArray[MMREntry.getKey()][entry.getKey()];elsesimSen=simSentArray[entry.getKey()][MMREntry.getKey()];if(simSen>simSentence){simSentence=simSen;}}simSentence=λ*entry.getValue()-(1-λ)*simSentence;if(simSentence>maxScore){maxScore=simSentence;index=entry.getKey();//句子編號 }}MMR_SentScore.put(index, maxScore);if(MMR_SentScore.size()==sortedLinkedSent.size())flag=false;}//System.out.println("MMR out...");return MMR_SentScore;}/*** 每個句子的相似度,這里使用簡單的jaccard方法,計算所有句子的兩兩相似度* @return*/private double[][] sentJSimilarity(){//System.out.println("sentJSimilarity in...");int size=sentSegmentWords.size();double[][] simSent=new double[size][size];for(Map.Entry<Integer, List<String>> entry:sentSegmentWords.entrySet()){for(Map.Entry<Integer, List<String>> entry1:sentSegmentWords.entrySet()){if(entry.getKey()>=entry1.getKey()) continue;int commonWords=0;double sim=0.0;for(String entryStr:entry.getValue()){if(entry1.getValue().contains(entryStr))commonWords++;}sim=1.0*commonWords/(entry.getValue().size()+entry1.getValue().size()-commonWords);simSent[entry.getKey()][entry1.getKey()]=sim;}}//System.out.println("sentJSimilarity out...");return simSent;}public static void main(String[] args){NewsSummary summary=new NewsSummary();String text="我國古代歷史演義小說的代表作。明代小說家羅貫中依據有關三國的歷史、雜記,在廣泛吸取民間傳說和民間藝人創作成果的基礎上,加工、再創作了這部長篇章回小說。" +"作品寫的是漢末到晉初這一歷史時期魏、蜀、吳三個封建統治集團間政治、軍事、外交等各方面的復雜斗爭。通過這些描寫,揭露了社會的黑暗與腐朽,譴責了統治階級的殘暴與奸詐," +"反映了人民在動亂時代的苦難和明君仁政的愿望。小說也反映了作者對農民起義的偏見,以及因果報應和宿命論等思想。戰爭描寫是《三國演義》突出的藝術成就。" +"這部小說通過驚心動魄的軍事、政治斗爭,運用夸張、對比、烘托、渲染等藝術手法,成功地塑造了諸葛亮、曹操、關羽、張飛等一批鮮明、生動的人物形象。" +"《三國演義》結構宏偉而又嚴密精巧,語言簡潔、明快、生動。有的評論認為這部作品在藝術上的不足之處是人物性格缺乏發展變化,有的人物渲染夸張過分導致失真。" +"《三國演義》標志著歷史演義小說的輝煌成就。在傳播政治、軍事斗爭經驗、推動歷史演義創作的繁榮等方面都起過積極作用。" +"《三國演義》的版本主要有明嘉靖刻本《三國志通俗演義》和清毛宗崗增刪評點的《三國志演義》";String keySentences=summary.SummaryMeanstdTxt(text);System.out.println("summary: "+keySentences);String topSentences=summary.SummaryTopNTxt(text);System.out.println("summary: "+topSentences);String mmrSentences=summary.SummaryMMRNTxt(text);System.out.println("summary: "+mmrSentences);} }

? 另外也可以引用漢語語言處理包,該包里面有摘要的方法

import com.hankcs.hanlp.HanLP;

import com.hankcs.hanlp.corpus.tag.Nature;

import com.hankcs.hanlp.seg.common.Term;

//摘要,200是摘要的最大長度

String summary = HanLP.getSummary(txt,200);

引用hanlp-portable-1.5.2.jar和hanlp-solr-plugin-1.1.2.jar

![[Cacti] cacti监控mongodb性能实战](http://blog.itpub.net/attachment/201410/5/26230597_1412496772lg39.png)